Whether it is music, speech, screams, or environmental sounds, we make sense of what we hear. This seems trivial because it happens so rapidly and efficiently for most of us, but its failure has serious consequences. To understand how making sense of sounds works (or does not work), I focus on different levels of analysis (à la Marr). Understanding the neurobiological mechanisms underlying the cognitive processes involved requires defining both the activities themselves (i.e., what the system does or uses, and what it is good for) and the processes involved in performing them (i.e., how it does it).

Concretely, my daily activities revolve around the acoustic description of categories (or mental representations of something abstract, such as correctness, beauty, or the communication of intention) and how they are processed.

Here are some examples of current research projects:

Sound processing

Categorisation of sounds

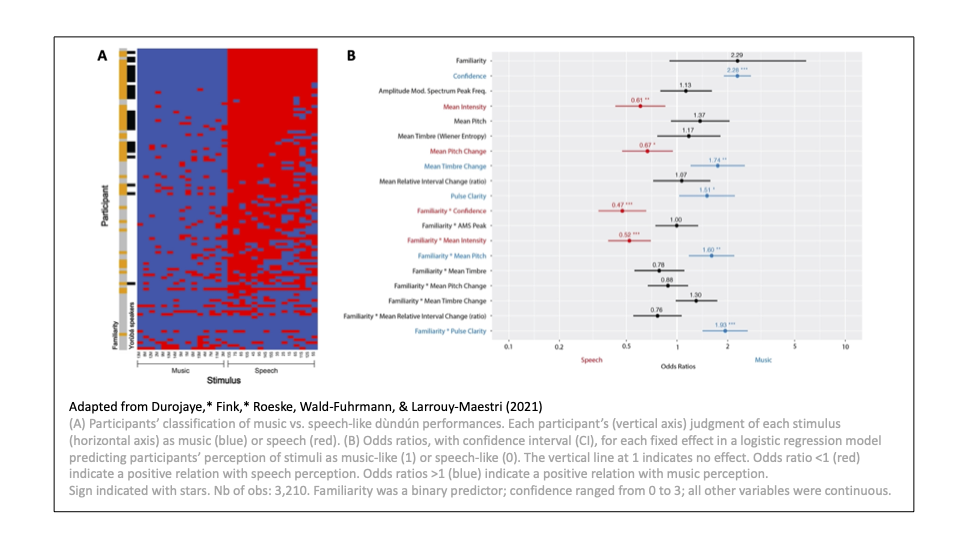

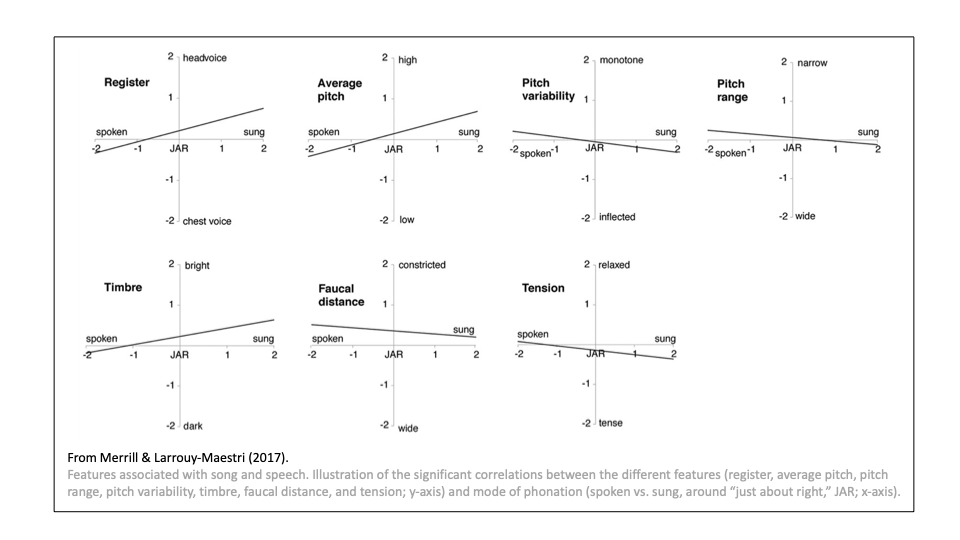

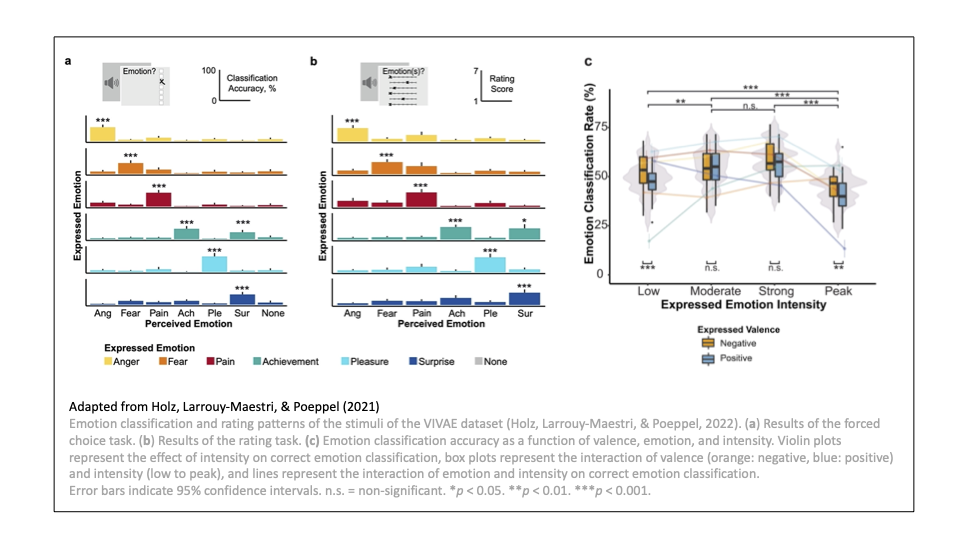

Although we quickly identify sounds as music, speech, or screams, how we form such abstract categories is not so clear. By examining ambiguous material, such as sprechgesang (Merrill & Larrouy-Maestri, 2017), or performances from a West-African talking drum capable of speech surrogacy (Durojaye, Fink, Roeske, Wald-Fuhrmann, & Larrouy-Maestri, 2021), we clarified the association between music and speech categories and certain acoustic features, as well as the role of familiarity with the material. In addition, observing the perception of non-verbal vocalisations informed us about the ambiguity of certain categories (Holz, Larrouy-Maestri, & Poeppel, 2021).

Together with Lauren Fink, Madita Hörster, and Melanie Wald-Fuhrmann, we try to put together the pieces of the puzzle and figure out how we manage (or not) to categorise sounds.

Auditory sequence processing

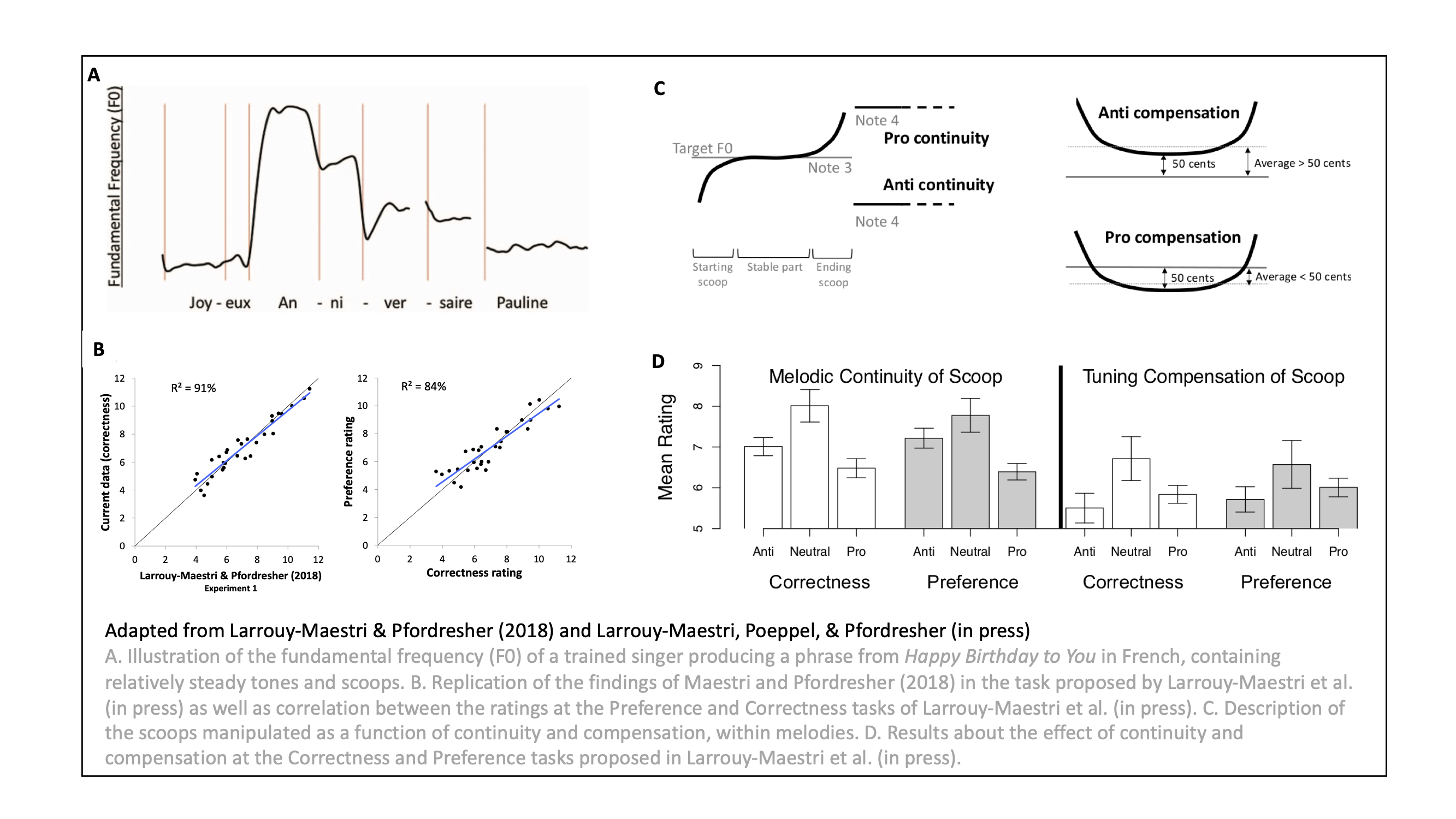

Music, like speech, can be described as a continuous stream of sounds that is parsed into units of different lengths. While tones are usually defined as the smallest discrete unit in Western music, we recently provided empirical evidence for the specific role of smaller units in music: scoops, which are small dynamic pitch changes at the start or end of sung notes within a melody (Larrouy-Maestri & Pfordresher, 2018; Larrouy-Maestri, Poeppel, & Pfordresher, in press). Together with Xiangbin Teng and David Poeppel, we focused on longer sequences and identified a neural signature that reliably tracks musical phrases (>5 seconds) online.

Recently joined by Lea Fink and Zofia Hobubowska on this project, we further explore how such signatures contribute to music listening behaviour/enjoyment.

Music

Perception of correctness

Listeners can easily say if a singer sounds in tune or out of tune (Larrouy-Maestri et al., 2013, 2015), with some tolerance (Larrouy-Maestri, 2018). However, as is true for several types of judgments (e.g., beauty or obscenity), the foundation of the categorization of performances as “correct” remains unclear. This project examines what ‘correctness’ means in different musical contexts and dimensions (pitch and time), as well as the cognitive processes behind such categorization.

To do so, we investigate the auditory processing of manipulated sequences with methods from psychophysics, physiology, and electrophysiology, and focus on potential listener profiles.

From correctness to preferences

Previous studies showed that lay and expert listeners share similar definitions of what is “correct” when listening to untrained (Larrouy-Maestri, Magis, Grabenhorst, & Morsomme, 2015) and trained singers (Larrouy-Maestri, Morsomme, Magis, & Poeppel, 2017), or to their own performances (Larrouy-Maestri, Wang, Vairo Nunes, & Poeppel, 2021). However, we usually don’t attend opera or pop concerts to evaluate the correctness of a performance, but rather to enjoy it.

With Camila Bruder, and the wise insights of Ed Vessel, David Poeppel, and Melanie Wald-Fuhrmann, we examine what “preference” means when listening to sung performances, and explore the roots of such aesthetic experience.

Language/communication

The tone of voice carries information about the emotional state or intention of a speaker. Whereas the acoustic features of contrasting prosodic signals have attracted a lot of attention in recent decades (particularly since Banse & Scherer, 1996), the communication of emotions and intentions remains poorly understood. Also, most listeners seem to share the ‘code’ (that is, they adequately interpret a prosodic signal) to access the emotions or intentions of speakers; however, misunderstandings easily occur. This project focuses on the cognitive processes involved in prosody comprehension.

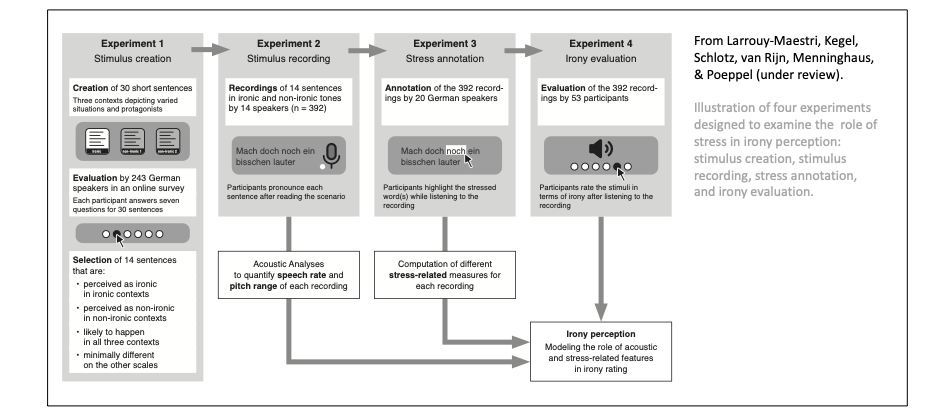

More specifically, we examine the categorization of utterances based on the integration of dynamic acoustic information (in the context of communicating irony, affect, or intention), with methods from psychophysics and electrophysiology.

Current collaborations with

Melanie Wald-Fuhrmann

David Poeppel

Daniela Sammler

Lauren Fink

Xiangbin Teng

Lea Fink

Marc Pell

Winfried Menninghaus

Simone Dalla Bella

Pol van Rijn

Vanessa Kegel

Claire Pelofi

Youssef Amin

Fredrik Ullén

Lara Pearson

Current supervision of

Camila Bruder

Madita Hörster

Zofia Hobubowska